July 8, 2023

The Hidden Secret to Accelerate the Reading Efficiency with ChatGPT

Paul

How will large language models like ChatGPT impact our reading and knowledge acquisition habits? Will new tools and paradigms for assisted reading comprehension emerge?

This article is the second in a series of discussions and research on the above questions. The previous article can be found here. It is important to note that this is an ongoing process of hypothesizing, studying, and validating. The views presented here reflect only my thinking at this stage and are subject to change in the future. This information is provided for reference purposes only, and we welcome further discussion.

The FoldSum Chrome extension mentioned in this article can be downloaded and installed directly from the Chrome Web Store.

TL;DR

- Prompting ChatGPT to summarize content in different formats lets us control the information density and the strength of content compression.

- By compressing paragraphs into lists and then converting lists back into paragraphs, we can achieve a recursive enhancement of reading efficiency for long articles.

- Summaries generated by large language models may occasionally contain anomalies, so these models are better suited as collaborative reading tools (copilots) than as fully automated tools.

Information Density and Content Compression

Before we go any further, some basic concepts need to be introduced. Summarizing is a form of content compression that enhances information density by reducing the word count, eliminating secondary information, and retaining key points. The degree of compression can vary — the stronger the compression, the more words are deleted, and the less information is retained.

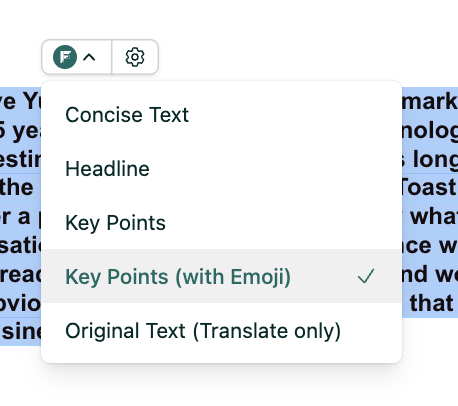
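To make the idea of compression strength concrete, here is a minimal sketch that measures it by word count, the same yardstick used in the paragraph above. The function names are ours, and word count is only a rough proxy: it says how much text was removed, not how much information survived.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def compression_ratio(original: str, summary: str) -> float:
    """Fraction of the original word count removed by the summary.

    1.0 means everything was removed, 0.0 means nothing was; the value is
    negative if the summary is longer than the original.
    """
    original_words = word_count(original)
    if original_words == 0:
        raise ValueError("original text is empty")
    return 1 - word_count(summary) / original_words


original = (
    "Summarizing is a form of content compression that keeps the key "
    "points and drops secondary detail."
)
summary = "Summarizing compresses content."
print(f"{compression_ratio(original, summary):.0%} of the words removed")
```

A headline would typically score closest to 1.0 on this measure, and a key-point list furthest from it.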

With this logic in mind, we have defined three forms of summaries, sorted from strongest to weakest content compression:

- Title (Headline)

- Paragraph (Concise Text)

- List (Key Points)

Title (Headline)

For the Headline summary, FoldSum uses prompts like this to guide ChatGPT:

“Your task is to analyze the following text and extract the most important and valuable information in one headline title. The title should be clear, concise, and intriguing, avoid using clickbait or misleading phrases.”

The advantage of a headline is its concise form, which facilitates rapid understanding. However, due to the constraints of the form, some detailed information may be lost.

Paragraph (Concise Text)

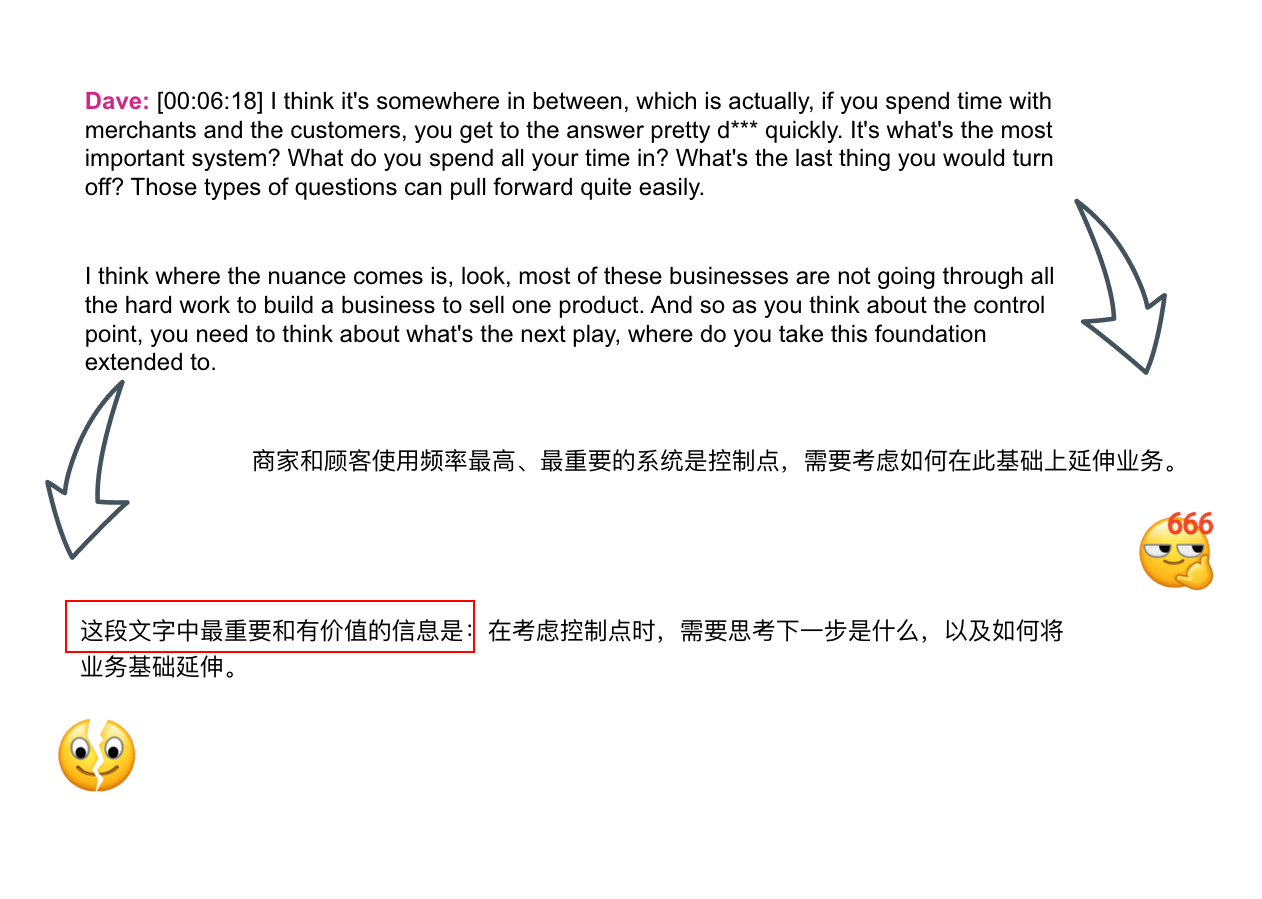

For the Concise Text summary, FoldSum uses prompts like this to guide ChatGPT:

“Your task is to analyze the following text and extract the most important and valuable information in one paragraph as concise as possible.”

This form can accommodate more information and is suitable for refining longer text content.

List (Key Points)

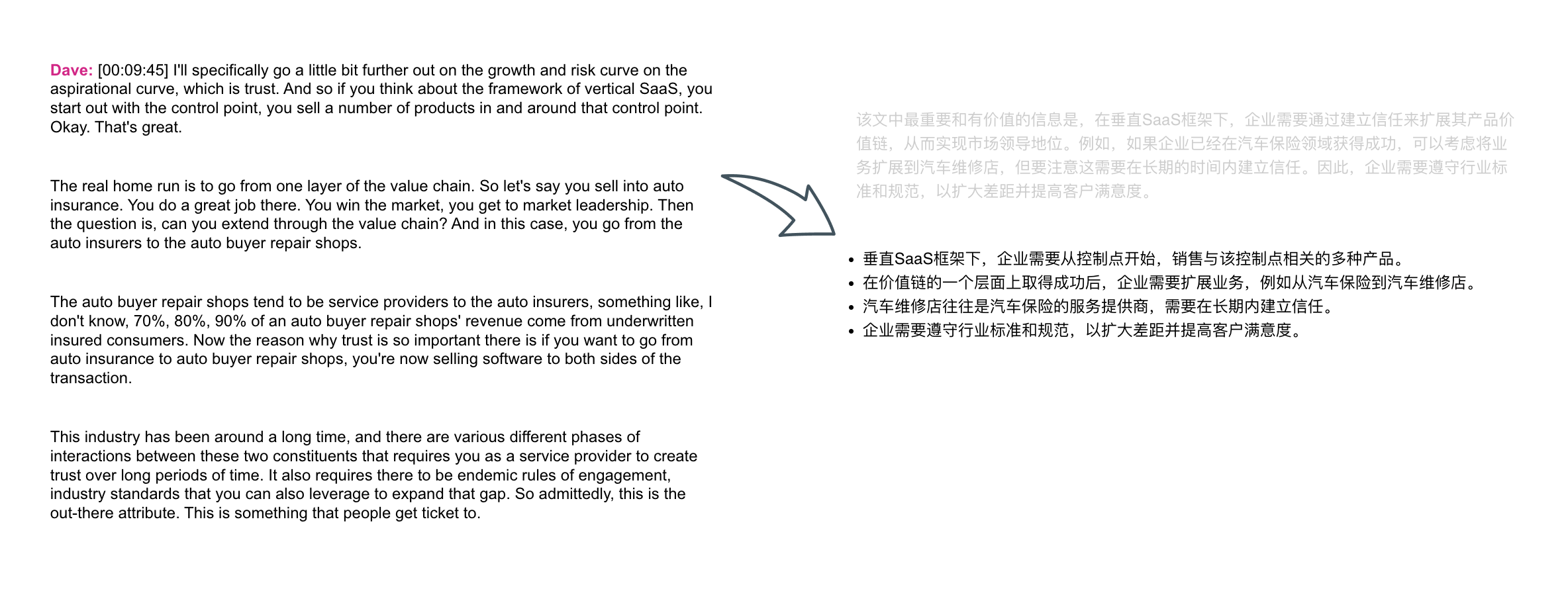

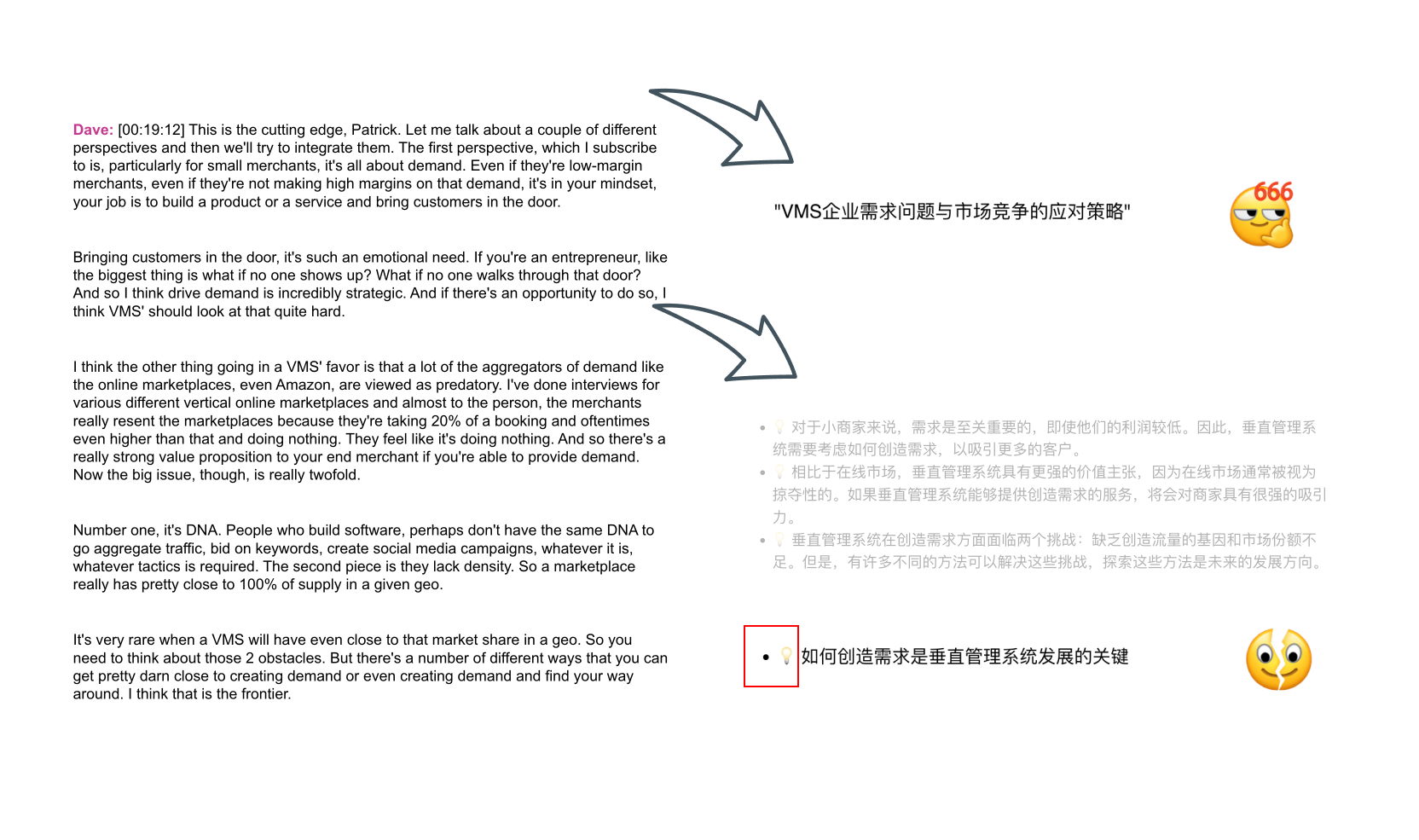

For the Key Points (List) summary, FoldSum uses prompts like this:

“Your task is to analyze the following text and extract key takeaways in bullets. Ensure that each key takeaway should be a list item, of the following format: ‘- [takeaway 1]’”

To further aid understanding of the content, FoldSum supports another format that outputs a relevant emoji before each list item:

“Your task is to analyze the following text and extract key takeaways in bullets. Ensure that each key takeaway should be a list item, of the following format: ‘- [relevant emoji][takeaway 1]’ Keep emoji unique to each takeaway item. Please try to use different emojis for each takeaway.”

This emoji list format appears more interesting and is the preferred format for summarizing long texts.
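As a rough sketch of how the formats could be selected in code, the prompts quoted above can be kept in a lookup table. The prompt texts are taken from this article; the dictionary keys, the `build_prompt` helper, and the way the source text is appended after a blank line are our assumptions for illustration, not FoldSum's actual implementation.

```python
# Prompt texts are quoted from the article; the keys and the helper below are
# hypothetical names used only for this sketch.
PROMPTS = {
    "headline": (
        "Your task is to analyze the following text and extract the most "
        "important and valuable information in one headline title. The title "
        "should be clear, concise, and intriguing, avoid using clickbait or "
        "misleading phrases."
    ),
    "concise_text": (
        "Your task is to analyze the following text and extract the most "
        "important and valuable information in one paragraph as concise as "
        "possible."
    ),
    "key_points": (
        "Your task is to analyze the following text and extract key takeaways "
        "in bullets. Ensure that each key takeaway should be a list item, of "
        "the following format: '- [takeaway 1]'"
    ),
    "emoji_key_points": (
        "Your task is to analyze the following text and extract key takeaways "
        "in bullets. Ensure that each key takeaway should be a list item, of "
        "the following format: '- [relevant emoji][takeaway 1]' Keep emoji "
        "unique to each takeaway item. Please try to use different emojis for "
        "each takeaway."
    ),
}


def build_prompt(summary_format: str, text: str) -> str:
    """Return the instruction for the chosen format, followed by the text."""
    if summary_format not in PROMPTS:
        raise ValueError(f"unknown summary format: {summary_format!r}")
    # Assumption: the text to summarize simply follows the instruction.
    return f"{PROMPTS[summary_format]}\n\n{text}"
```

Choosing a key is then equivalent to choosing a compression strength: `"headline"` is the strongest and the two list formats are the weakest.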

Accelerating Reading of Long-Form Texts

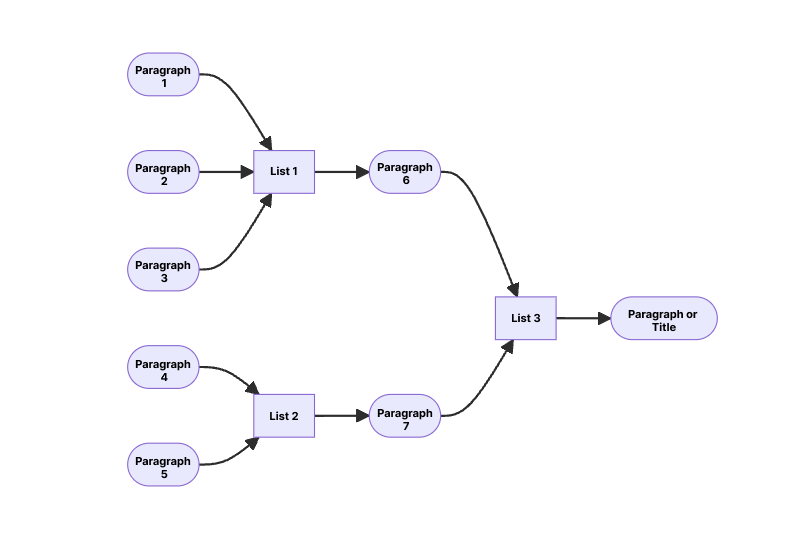

By combining these three basic units of content compression, we can conveniently speed up reading for long articles.

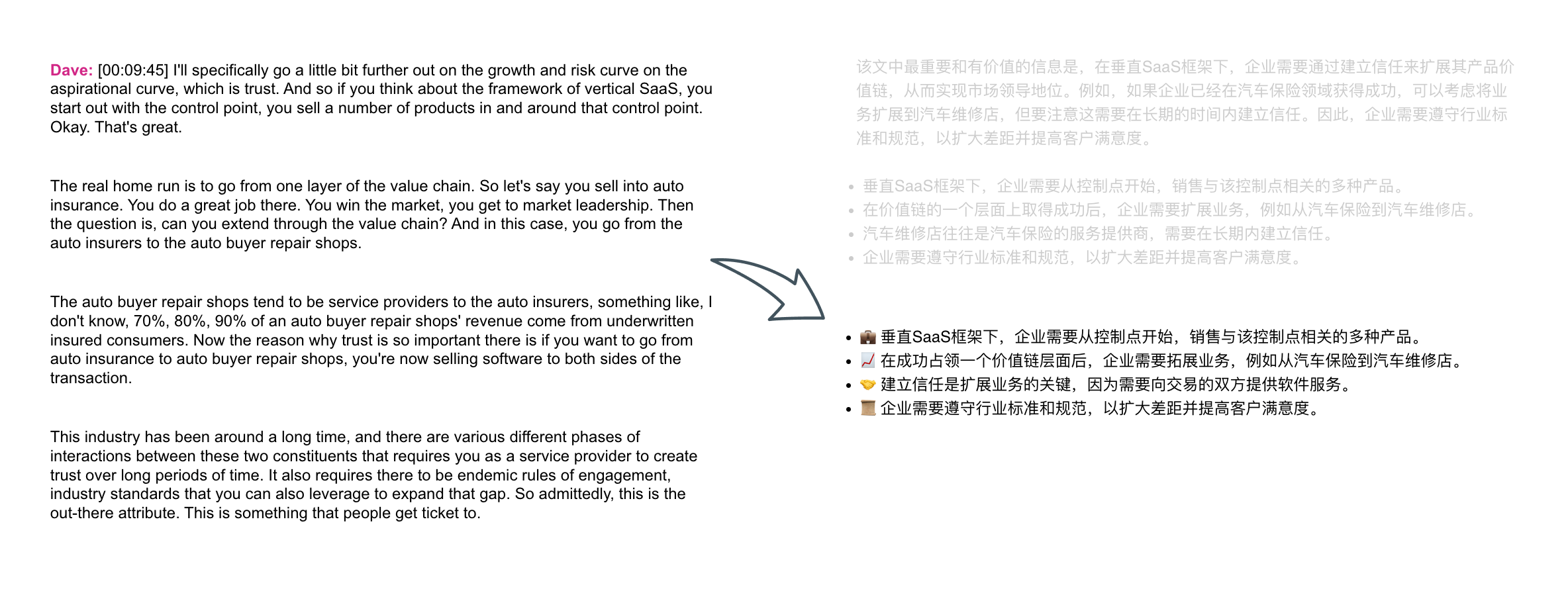

The pattern is to first compress the original paragraphs of the article into multiple lists, then compress each list into a paragraph. This operation is repeated in a recursive cycle, eventually compressing an entire article into a paragraph or even a headline.
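The folding cycle described above can be sketched as a short recursive function. This is a minimal illustration under stated assumptions, not FoldSum's code: `summarize` is a hypothetical callable standing in for a model call, the format names `"key_points"` and `"concise_text"` are our labels, and grouping three paragraphs per list is an arbitrary choice that makes each round shrink the input.

```python
from typing import Callable, List

# Hypothetical signature: summarize(summary_format, text) -> summary text.
Summarizer = Callable[[str, str], str]


def fold(paragraphs: List[str], summarize: Summarizer, group_size: int = 3) -> str:
    """Recursively fold paragraphs into a single concise paragraph.

    Each round turns every group of `group_size` paragraphs into one key-point
    list, then turns each list into one paragraph. Rounds repeat until a
    single paragraph remains. A one-paragraph input is returned unchanged.
    """
    if group_size < 2:
        raise ValueError("group_size must be at least 2 so each round shrinks the input")
    if not paragraphs:
        raise ValueError("nothing to fold")
    if len(paragraphs) == 1:
        return paragraphs[0]

    folded = []
    for start in range(0, len(paragraphs), group_size):
        group = "\n\n".join(paragraphs[start:start + group_size])
        key_points = summarize("key_points", group)            # paragraphs -> list
        folded.append(summarize("concise_text", key_points))   # list -> paragraph
    return fold(folded, summarize, group_size)


def stub_summarize(summary_format: str, text: str) -> str:
    """Stand-in for a model call: keeps only the first five words."""
    return " ".join(text.split()[:5])


article = [f"Paragraph {i} talks about topic {i} in some detail." for i in range(7)]
print(fold(article, stub_summarize))
```

To go one step further and reach a headline, the final paragraph can be passed through the summarizer once more with a headline prompt. In practice a reader would inspect the intermediate lists at each round rather than only the end result.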

A Demo

We ran a small test on a podcast transcript, taking approximately the first 2,000 words, which correspond to around 11 minutes of audio. The process involved understanding each question and answer, then a second pass of comprehension while folding, and finally generating the final list (including the title). This took about 4 minutes in total. In comparison, the average reading speed for adults is approximately 200-300 words per minute, so reading the same text unaided would take roughly 7 to 10 minutes. Once the additional time needed to digest and comprehend the content is included, we estimate that reading efficiency improves by at least 100%.
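The estimate can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the test (2,000 words, 4 minutes assisted, 11 minutes of audio, 200-300 words per minute); the function name is ours.

```python
WORDS = 2000            # approximate transcript length from the test
ASSISTED_MINUTES = 4    # time reported for the folded reading
AUDIO_MINUTES = 11      # listening time for the same excerpt


def speedup_percent(baseline_minutes: float, assisted_minutes: float) -> float:
    """Percentage increase in throughput relative to the baseline."""
    return (baseline_minutes / assisted_minutes - 1) * 100


for wpm in (200, 300):
    reading_minutes = WORDS / wpm
    gain = speedup_percent(reading_minutes, ASSISTED_MINUTES)
    print(f"{wpm} wpm: {reading_minutes:.1f} min unaided, {gain:.0f}% faster with folding")

print(f"vs. listening: {speedup_percent(AUDIO_MINUTES, ASSISTED_MINUTES):.0f}% faster")
```

Pure reading time alone gives a gain of 150% at 200 words per minute and about 67% at 300 words per minute. A 100% gain corresponds to an unaided time of 8 minutes, so for a fast reader the "at least 100%" figure depends on the extra time spent digesting the content. Measured against listening to the audio, the gain is 175%.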

Output Quality by Large Language Models

In most cases, the output of large language models is as expected. However, there are exceptions. For example, even with output guidelines and format restrictions at the end of the prompt, the generated summary may still begin with an unnecessary prefix such as “The main content of this article is:”.

Therefore, it is better to view these large language models as collaborative reading tools (copilots) and to accept that their output will not always be as expected.
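One way to live with such anomalies is a light post-processing step that cleans up known prefixes and flags output that does not match the requested list format. The following is a minimal sketch under our own assumptions: the first prefix is the example quoted above, the second is a hypothetical addition, and both function names are ours.

```python
import re

# The first phrase is the example from the article; the second is a
# hypothetical one. Extend the tuple as new lead-ins show up.
UNWANTED_PREFIXES = (
    "The main content of this article is:",
    "Here is the summary:",
)


def strip_prefix(summary: str) -> str:
    """Remove one known lead-in phrase from the start of a summary, if present."""
    cleaned = summary.strip()
    for prefix in UNWANTED_PREFIXES:
        if cleaned.lower().startswith(prefix.lower()):
            return cleaned[len(prefix):].lstrip()
    return cleaned


def is_key_point_list(summary: str) -> bool:
    """Check that every non-empty line has the '- [takeaway]' bullet form."""
    lines = [line.strip() for line in summary.splitlines() if line.strip()]
    return bool(lines) and all(re.match(r"-\s+\S", line) for line in lines)


raw = "The main content of this article is: LLMs can speed up reading."
print(strip_prefix(raw))
print(is_key_point_list("- first takeaway\n- second takeaway"))
```

A summary that fails the format check is best shown to the reader with an option to retry, rather than silently accepted or discarded, which fits the copilot view described above.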

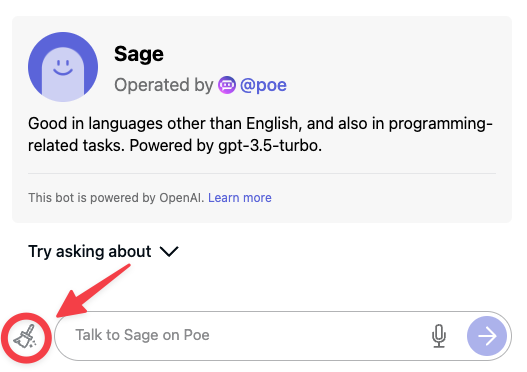

Furthermore, in chat mode, ChatGPT and POE may be influenced by the chat history. Even when the prompt explicitly says to “ignore previous instructions,” the output may still be affected by earlier turns.

When you notice the output being affected by previous content, it is best to clear the context using the method each product officially provides. For example, in ChatGPT you can start a new chat; in POE you can press the clear context button. This ensures that subsequent instructions are not affected by the previous content.
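If the prompts are sent programmatically rather than through a chat window, the same isolation can be approximated by building a fresh message list for every request. The sketch below assumes the role/content message layout common to chat-style APIs; the function name is hypothetical and no specific provider's client is called.

```python
from typing import Dict, List

Message = Dict[str, str]


def fresh_messages(instruction: str, text: str) -> List[Message]:
    """Build a message list holding only the current instruction and text.

    No earlier turns are included, so nothing from a previous summary is
    carried into this request.
    """
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": text},
    ]


first = fresh_messages("Summarize in one headline.", "Text of article A")
second = fresh_messages("Summarize in bullets.", "Text of article B")
print(len(first), len(second))
```

Each request then starts from the same clean state that a new chat window provides.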

Conclusion

Large language models like ChatGPT offer exciting new possibilities for enhancing our reading and comprehension experiences. New tools such as FoldSum are emerging, enabling more efficient content consumption and collaboration, even as they continue to evolve and improve.
